TechLetters #117: US cyber strategy and software liability; LastPass breach details; Privacy leaks in synthetic data; Cyberwarfare in Ukraine; Considerations for a TikTok ban

Security

Details of the December LastPass breach. The attacker "targeted LastPass infrastructure, resources, employee ... valid credentials stolen from a senior DevOps engineer [used] to access a shared cloud-storage environment". Someone really wanted to breach LastPass. An employee's home computer was breached.

Fake air raid alert broadcast in Russia. Russia's Ministry of Emergency Situations confirmed that many commercial radio stations were hacked. As a result, a fake announcement warning of 'the upcoming air strikes and the threat of a missile attack' was broadcast in many places in Russia.

Data selling in wartime. Ukraine's security service neutralised "a scheme of selling personal data of Ukrainian citizens". A Telegram bot was leaking private data, with a one-month 'subscription' priced at $200.

Chinese state cyber operators targeted a Belgian member of parliament. The release does not say whether he fell for it or whether it was merely a spear-phishing attempt. The attack is likely a response to his criticism of China's policies.

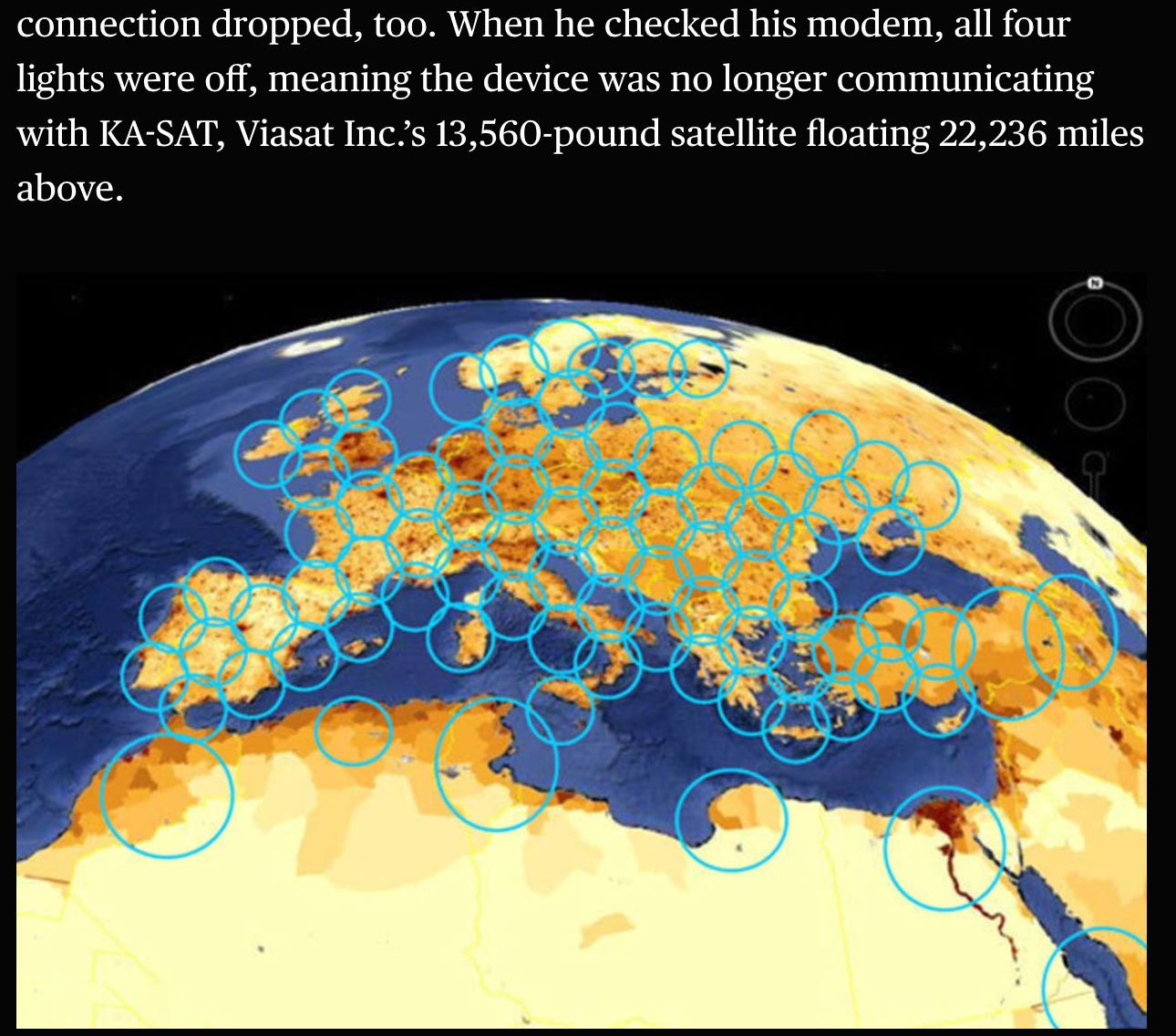

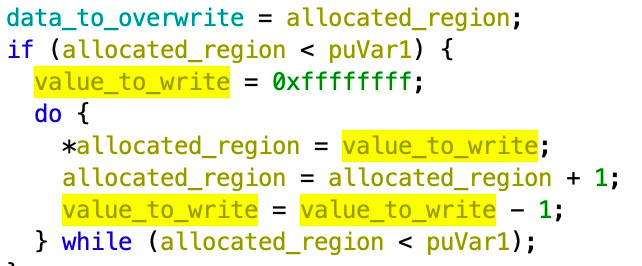

Cyberwarfare against space systems. How the KA-SAT satellite cyberattack that accompanied Russia's land invasion of Ukraine happened. Thirteen countries were affected, as were Ukraine's military and government. "Russia was testing ability to hack and destroy satellite systems". Was it too easy?

The new US cybersecurity strategy is impressive. It copies some ideas previously mandated in Europe, and maybe these will actually work in the US. It also aims to "defeat ransomware" and to introduce liability for software bugs! Post-quantum resilience is elevated to a strategic national priority. This is also the world's first government document of its kind admitting that the opening cyberwarfare salvo of the 2022 war in Ukraine reached countries other than Ukraine (NATO countries).

So you want a TikTok ban? You will have to ban over 28 000 apps, including US/EU ones.

Privacy

Users who opt out of tracking still have their data processed. Interesting research, though it is unclear whether the authors draw appropriate conclusions with respect to data protection laws.

Privacy leaks in synthetic data. Is synthetic data a magical solution for privacy-preserving data processing? It is still derived from private data (to offer real-world statistical value, it has to be!). Leaks may still happen, including deanonymisation attacks.
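Why "derived from private data" matters can be shown with one simple sanity check, sketched here as an illustration rather than as the method used in the linked research: the distance to closest record (DCR). If a synthetic row sits (almost) exactly on a real row, the generator has likely memorised it, and rare outliers are the easiest records to re-identify. The toy data, the helper names, and the `threshold` value below are hypothetical.

```python
import math

def distance_to_closest_record(synthetic, real):
    """For each synthetic record, return the Euclidean distance to its nearest real record."""
    return [min(math.dist(s, r) for r in real) for s in synthetic]

def flag_possible_copies(synthetic, real, threshold=1e-6):
    """Return indices of synthetic records that (nearly) duplicate a real record."""
    dcr = distance_to_closest_record(synthetic, real)
    return [i for i, d in enumerate(dcr) if d <= threshold]

# Hypothetical toy data: (age, yearly income in thousands)
real = [(34, 52.0), (41, 61.5), (29, 48.0), (87, 950.0)]  # last record is a rare outlier
synthetic = [(35, 50.0), (40, 60.0), (87, 950.0)]         # generator reproduced the outlier

print(flag_possible_copies(synthetic, real))  # prints [2]
```

Passing such a check does not prove privacy: a synthetic record can still leak information without being an exact copy, which is why stronger attacks (membership inference, deanonymisation) remain relevant.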

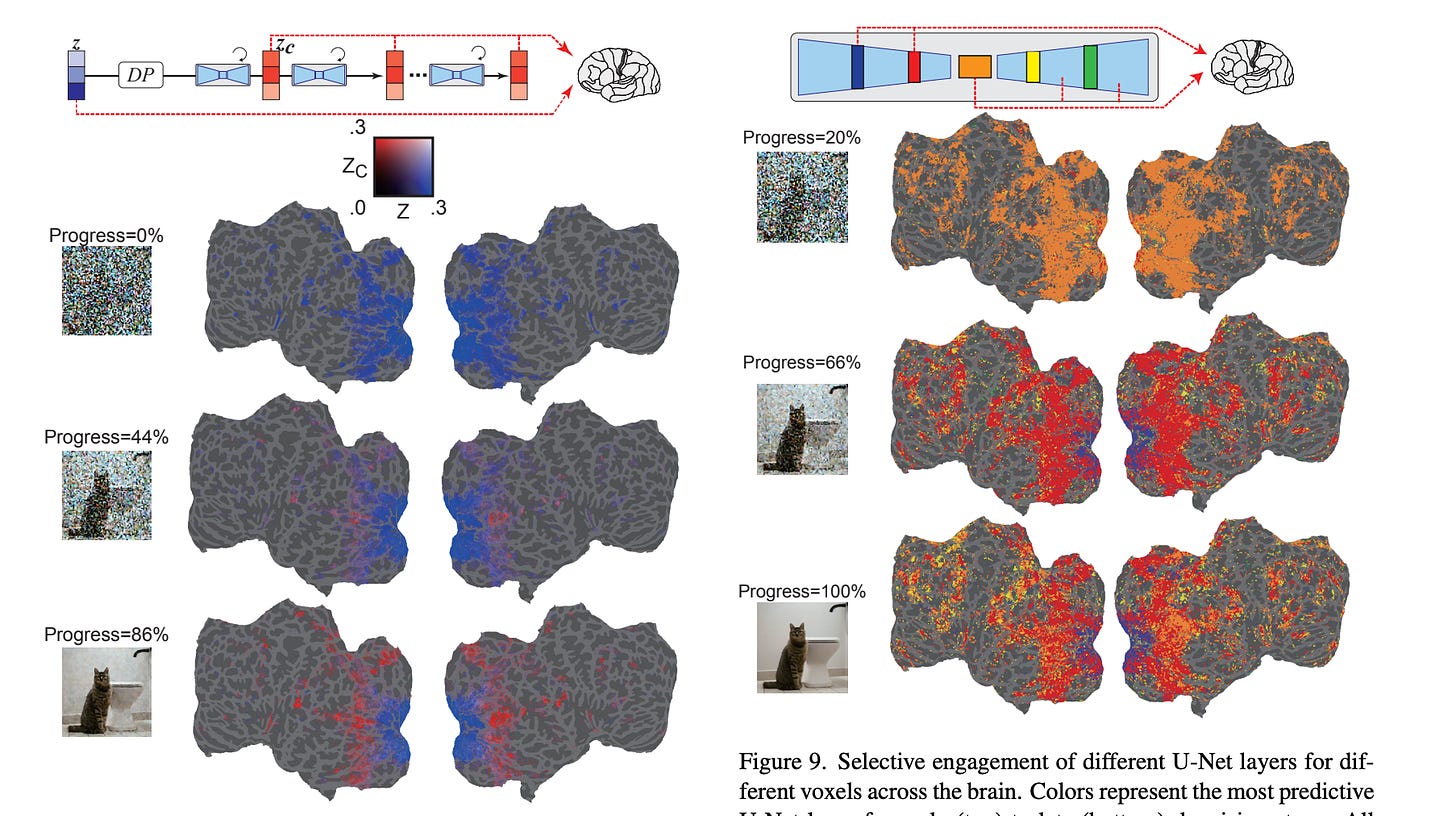

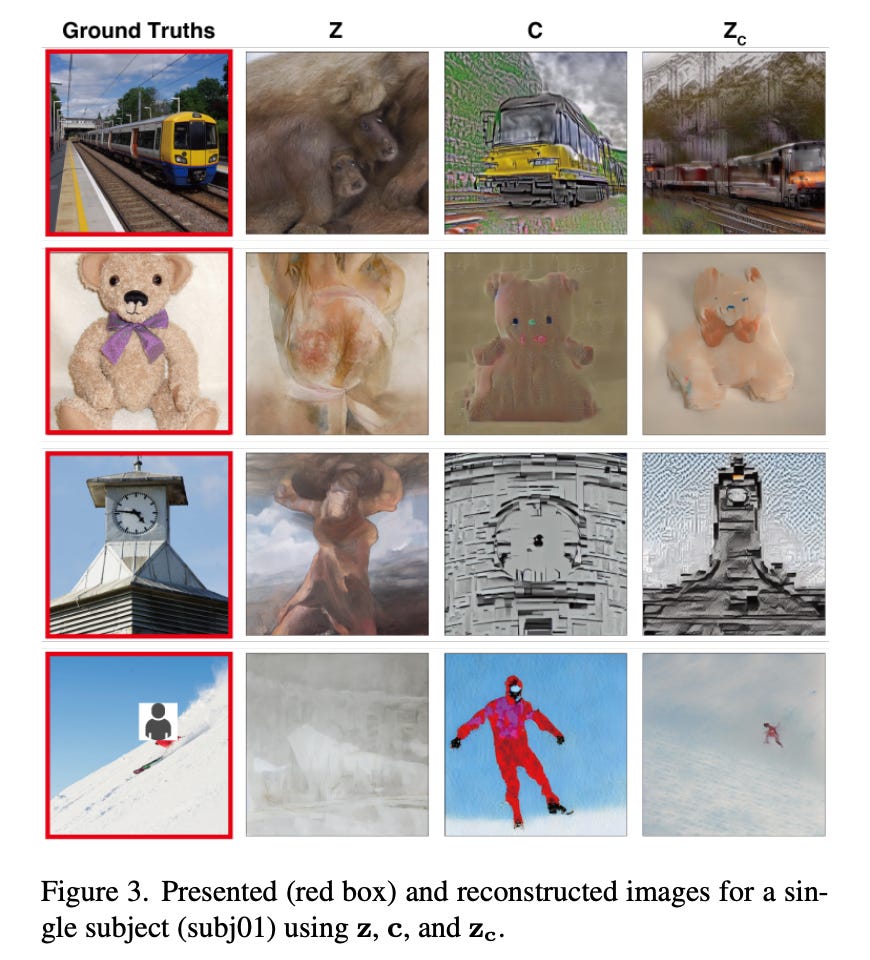

Querying data from the brain. Reconstructing visual experiences from human brain activity: images recovered from fMRI brain signals with the help of a diffusion model (the "AI" layer). Speaking of neural privacy, that should shock you sufficiently. Of course, this is not reading images directly from the brain, as the model is trained on specific data and images, but where this may or may not lead is left as an exercise to the reader.

Technology Policy

Other
