TechLetters Insights. Foreign Information Manipulation and Interference are here to stay.

“The information space has become a global battlefield.” So begins the third report on foreign information manipulation and interference (FIMI), in the words of Kaja Kallas, the EU's High Representative for Foreign Affairs and Security Policy.

Between November 2023 and November 2024, 505 FIMI incidents were classified. These involved 38,000 channels across 25 platforms, targeting 90 countries and 322 organisations, with more than 68,000 pieces of content recorded. The infrastructure behind these incidents spans official state media, covert networks, state-aligned proxies, and unattributed channels. Information influence operations use a layered and scalable system.

Russia remains the most active actor. About half (257) of the incidents in the dataset targeted Ukraine. France (152 incidents), Germany (73), and Moldova (45) also faced sustained targeting. Russian campaigns are highly adaptable: tailored to local languages and contexts, but aligned with consistent geopolitical goals. Germany and France were hit with localised campaigns. Ukraine, Moldova, Poland and the Baltic States are the key focus areas due to their geopolitical importance.

Content localisation was used in 349 incidents, adjusting narratives to match regional culture, language, and current events. Impersonation tactics were widespread: 124 cases involved fake (mirrored) sites designed to mimic legitimate media, and 127 involved impersonation of institutions or public figures. The goal is amplification and erosion of trust. Some 73% of active FIMI channels (around 28,000 out of 38,000) were short-lived or disposable accounts: bot networks used for coordinated inauthentic behaviour. The X platform accounted for 88% of observed activity. This makes sense: X is an opinion-shaping place.
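As a quick sanity check, the shares behind the figures quoted above can be recomputed in a few lines of Python. This is only a sketch: the variable and function names are illustrative assumptions, and the numbers are simply those cited in this text, not values pulled from the report's underlying dataset.

```python
# Figures as quoted in the text above (period: Nov 2023 to Nov 2024).
TOTAL_INCIDENTS = 505
TOTAL_CHANNELS = 38_000

# Incident counts per targeted country, as cited in the text.
incidents_by_target = {"Ukraine": 257, "France": 152, "Germany": 73, "Moldova": 45}


def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


target_shares = {
    country: share(count, TOTAL_INCIDENTS)
    for country, count in incidents_by_target.items()
}

# 73% of all channels: the share described as short-lived or disposable.
# Integer arithmetic avoids floating-point rounding surprises.
disposable_channels = TOTAL_CHANNELS * 73 // 100

# How far the four country counts together exceed the incident total.
overlap = sum(incidents_by_target.values()) - TOTAL_INCIDENTS

if __name__ == "__main__":
    for country, pct in target_shares.items():
        print(f"{country}: {pct}% of incidents")
    print(f"Disposable channels: about {disposable_channels:,}")
    print(f"Country counts exceed the incident total by {overlap}")
```

Ukraine comes out at 50.9% of incidents, and 73% of 38,000 channels is 27,740, consistent with the "around 28,000" above. The four country counts sum to 527, which is 22 more than the 505 incidents, suggesting that a single incident can target more than one country.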

Artificial intelligence was used in at least 41 incidents. Its role is to streamline the generation of inauthentic content (deepfakes, synthetic audio) and to automate large-scale dissemination. AI need not mean greater impact. The point is to lower the cost and boost output.

Operations targeting elections usually begin well before the vote and extend well beyond it. The goal is to erode trust in the process rather than to back specific candidates.

Such operations are not random trolling. They are well-designed and well-executed influence campaigns. Their point is not to send individual messages but to run a long-term process. Only then can propaganda succeed.

The report makes clear that the situation is not just concerning. It is structurally dangerous. Foreign information manipulation has evolved into a strategic weapon that can be integrated into broader hybrid operations. Russia stands out as the most aggressive actor, not just disrupting but potentially aiming to informationally disintegrate the EU. Through coordinated, multi-layered campaigns that combine official media, covert networks, manufactured content, and impersonation tactics, Russia seeks to polarise societies, erode institutional trust, and weaken democratic resilience from within. The scale, consistency, and adaptability of these operations suggest that Russia is acting as if its ultimate goal were nothing less than the informational dismantling (destruction) of the European Union itself.

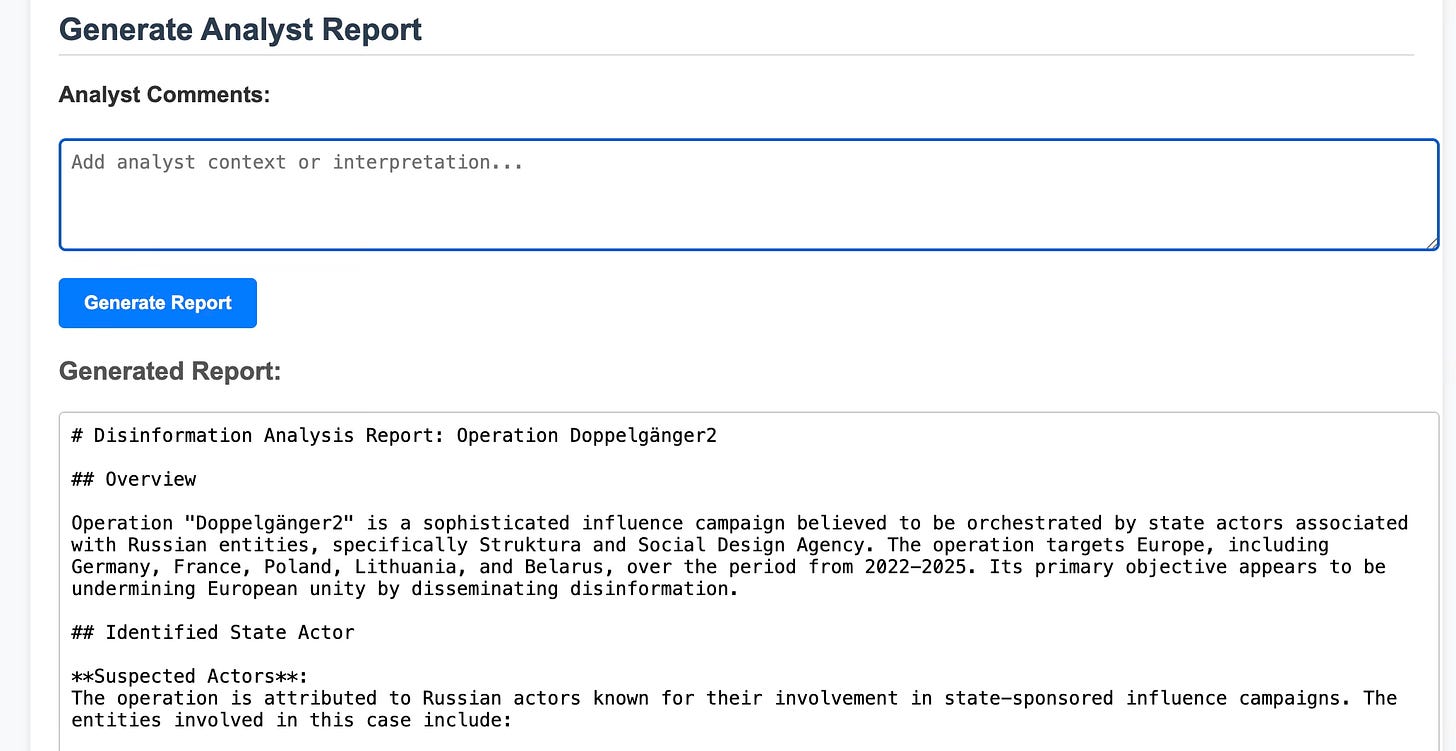

Oh, and for a dose of fun, I also crafted a proof-of-concept demonstration of the EEAS-inspired Threat Info Matrix. Example use (based on Doppelgänger activities) below.